py爬虫-b站课程(入门的知识和基础的应用)

1.初识requests模块

1.1分类

通用爬虫:抓取的是一整张页面数据

聚焦爬虫:抓取的是页面中特定的局部内容

增量式爬虫:只会抓取网站中最新更新出来的数据,

1.2http和https

1.2.1http协议

就是服务器和客户端交互的一种形式

1.2.1.1常用的请求头信息

User-Agent:请求载体的身份标识

Connection:请求完毕后,是断开连接还是保持连接。close/keep alive

1.2.1.2常用的响应头信息

Content-Type:服务器响应回客户端的数据类型

1.2.2https

安全的超文本传输协议,就是加密之后的http

加密方式:

·对称秘钥加密

·非对称秘钥加密

·证书秘钥加密

2.requests模块

2.1如何使用

·指定url

·发起请求

·获取响应数据

·持久化存储

示例1:百度页面的数据

import requests

url = 'https://baidu.com/'

res = requests.get(url) #使用get请求

#返回一个字符串

print(res.text)

2.2根据案例开始深入学习

简易的网易采集器(根据关键字在搜索引擎中搜索)

import requests

key = input()

param = {'query':key}#可以吧参数封装到字典中

url = f'https://www.sogou.com/web'#这里的网址是经过简单处理的,观察url就可以发现,关键字在query参数后面。

#ua伪装

#注意这个handers必须是一个字典的形式

user_agent = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69'}

#使用requests的时候用params参数来增加参数

res = requests.get(url,params=param,headers=user_agent)

print(res.text)

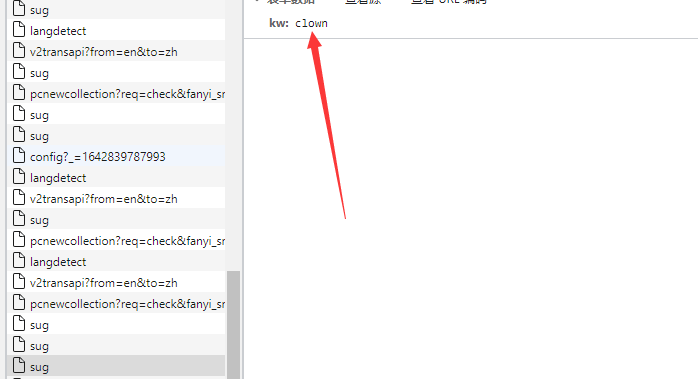

破解百度翻译

知识:阿贾克斯请求,AJAX 是一种用于创建快速动态网页的技术。

通过在后台与服务器进行少量数据交换,AJAX 可以使网页实现异步更新。这意味着可以在不重新加载整个网页的情况下,对网页的某部分进行更新。

百度翻译每一次翻译都会刷新页面,那我们找到对应的ajax请求就可以了

在分类中的xhr中就是ajax请求的分类。

把他的请求地址作为我们爬虫的请求地址

记住方法是post,我们的请求方法就要变成requests.post,

payload里面只有一个参数kw,我们要在请求的时候加入数据kwdata = {'kw':f'{key}'}记住也是字典模式

响应回来的数据是json数据,我们应该调用json方法返回(返回的是一个字典)。

import requests

import json

key = input()

url = 'https://fanyi.baidu.com/sug'

handers = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69'}

data = {'kw':f'{key}'}

res = requests.post(url,headers=handers,data=data)

print(res.json())

#进行持久化存储

fp = open(f'./{key}.json','w',encoding = 'utf-8')

json.dump(res.json(),fp = fp,ensure_ascii=False)#把数据结构转换成json,因为有中文所以不能用ASCII编码。

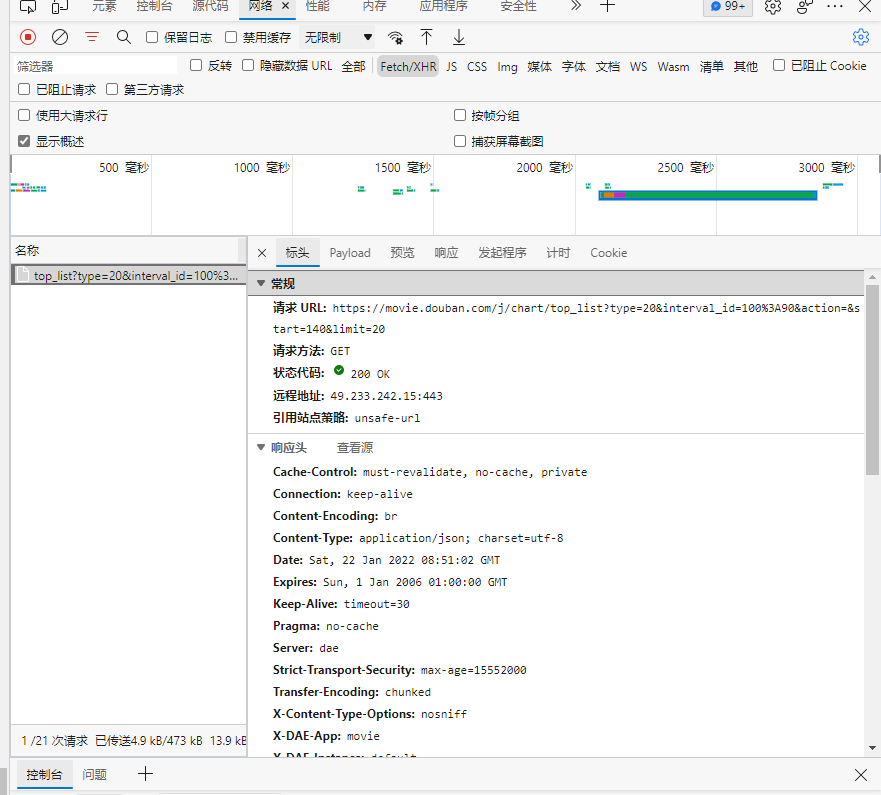

爬取豆瓣

每一次页面滑到底部的时候,就会出现新的电影,url没有变,那就是阿贾克斯请求,在页面抓包工具中找包

就这一个,是get请求,参数在payload里面去看,记下请求的url

import requests

import json

num = input("你先要几部恐怖片")

url = 'https://movie.douban.com/j/chart/top_list?'

#记住参数要封装在字典中

param = {'type':'20',

'interval_id':'100:90',

'action':'',

'start': '0',#分析一下我们知道是从第几个电影开始取

'limit':f'{num}'}#限制取几个电影

handers = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69'}

res = requests.get(url,headers=handers,params=param)

print(res.json())

with open('./恐怖电影.json','w',encoding='utf-8') as fp:

json.dump(res.json(),fp=fp,ensure_ascii=False)

然后我又优化了一下,可以选种类,指定电影的数量,输出的时候只输出名字和网址

import requests

import json

print('''

[['纪录片', '音乐', 1], ['剧情', '传记', '历史', 2], ['犯罪', '剧情', 3], ['剧情', '历史', '战争', 4], ['喜剧', '动作', '爱情', 5], ['剧情', '战争', '情色', 6], ['剧情', '歌舞', 7]]

[['剧情', '犯罪', '悬疑', 10], ['犯罪', '剧情', 11], ['剧情', '爱情', '灾难', 12], ['剧情', '爱情', '同性', 13], ['音乐', 14], ['剧情', '科幻', '冒险', 15],

['剧情', '动画', '奇幻', 16], ['剧情', '科幻', '冒险', 17], ['运动', 18], ['剧情', '犯罪', '惊悚', 19]],[['悬疑', '惊悚', '恐怖', 20]]

[['剧情', '喜剧', '爱情', '战争', 22], ['科幻', '动画', '短片', 23], ['剧情', '喜剧', '爱情', '战争', 24], ['剧情', '动画', '奇幻', 25], ['剧情', '爱情', '同性', 26], ['冒险', '西部', 27],

['剧情', '爱情', '家庭', 28], ['爱情', '奇幻', '武侠', '古装', 29], ['剧情', '动画', '奇幻', '古装', 30], ['剧情', '黑色电影', 31]]

''')

type = input("你要什么类型的电影")

num = input("你要几部这样的电影,按照评分排序")

url = 'https://movie.douban.com/j/chart/top_list?'

#记住参数要封装在字典中

param = {'type':f'{type}',#电影的种类

'interval_id':'100:90',

'action':'',

'start': '0',#分析一下我们知道是从第几个电影开始取

'limit':f'{num}'}#限制取几个电影

handers = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69'}

res = requests.get(url,headers=handers,params=param)

for item in range(0,int(num)):

print(res.json()[item].get('title'),res.json()[item].get('url'))

#with open('./恐怖电影.json','w',encoding='utf-8') as fp:

# json.dump(res.json(),fp=fp,ensure_ascii=False)

#[['纪录片', '音乐', 1], ['剧情', '传记', '历史', 2], ['犯罪', '剧情', 3], ['剧情', '历史', '战争', 4], ['喜剧', '动作', '爱情', 5], ['剧情', '战争', '情色', 6], ['剧情', '歌舞', 7]]

#[['剧情', '犯罪', '悬疑', 10], ['犯罪', '剧情', 11], ['剧情', '爱情', '灾难', 12], ['剧情', '爱情', '同性', 13], ['音乐', 14], ['剧情', '科幻', '冒险', 15],

# ['剧情', '动画', '奇幻', 16], ['剧情', '科幻', '冒险', 17], ['运动', 18], ['剧情', '犯罪', '惊悚', 19]],[['悬疑', '惊悚', '恐怖', 20]]

#[['剧情', '喜剧', '爱情', '战争', 22], ['科幻', '动画', '短片', 23], ['剧情', '喜剧', '爱情', '战争', 24], ['剧情', '动画', '奇幻', 25], ['剧情', '爱情', '同性', 26], ['冒险', '西部', 27],6.

# ['剧情', '爱情', '家庭', 28], ['爱情', '奇幻', '武侠', '古装', 29], ['剧情', '动画', '奇幻', '古装', 30], ['剧情', '黑色电影', 31]]

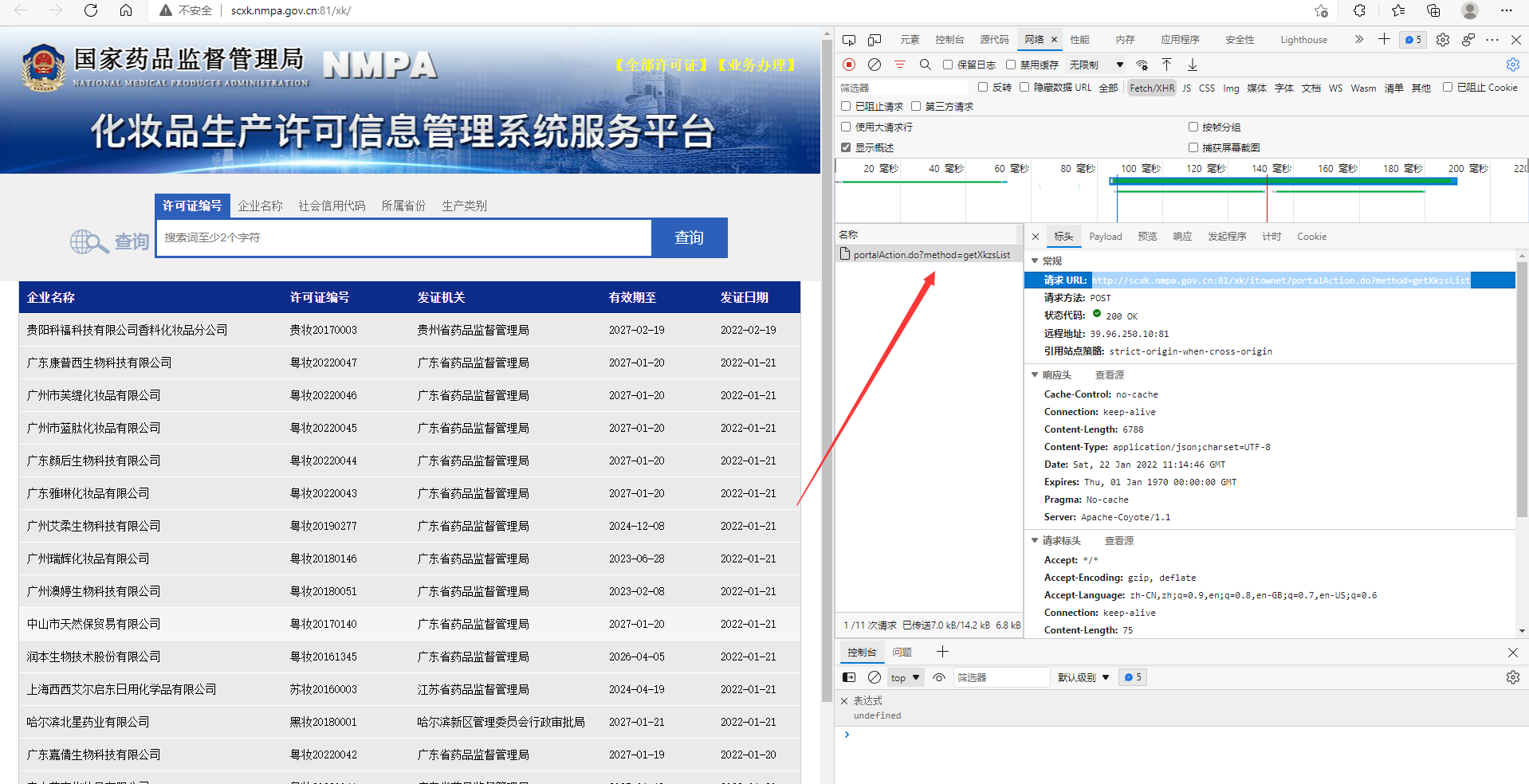

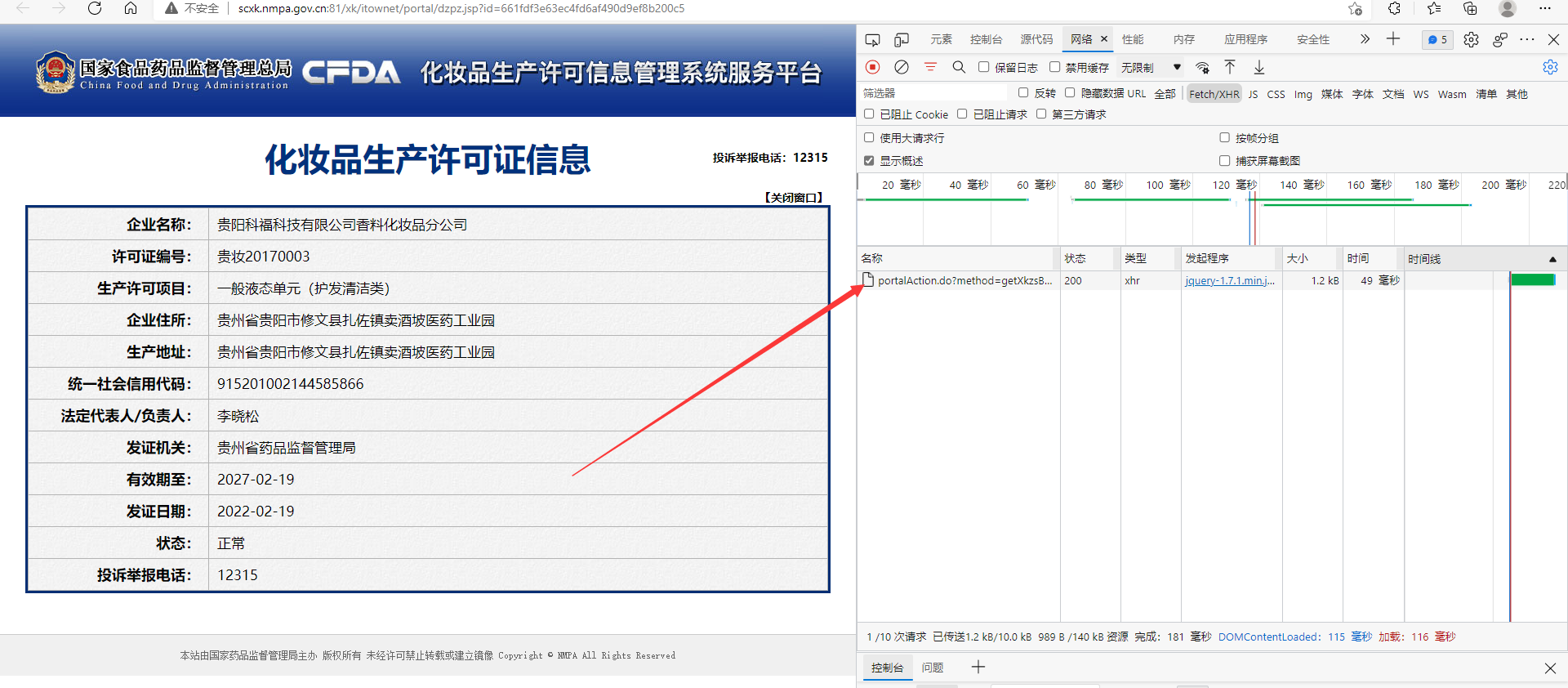

综合:国家药品监督管理总局中基于中华人民共各国化妆品生产许可相关数据(动态加载)

首先我们发现这下面的数据都是阿贾克斯数据包动态加载出来的

然后每一个公司的详细信息也是动态加载出来的

那就可以开始写代码了,主要思路就是拼接url上的id

import requests

page = input("请输入你要爬取第几页的信息: ")

handers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69'}

url = 'http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do?method=getXkzsList'

param = {

'method': 'getXkzsList',

'on': 'true',

'page': f'{page}',

'pageSize': '15',

'productName':'' ,

'conditionType': '1',

'applyname':'',

'applysn': ''

}

#首先获取公司和他们的id

res = requests.post(url,headers=handers)

name_id = dict()

for i in range(len(res.json().get('list'))):

id = res.json().get('list')[i].get('ID')

name = res.json().get('list')[i].get('EPS_NAME')

name_id[name] = id

for index,item in enumerate(name_id.keys()):

print(index,item)

#问要看哪个公司的信息

company = input("你想看哪个公司的信息,请输入那个公司的名字,懒得输请自己复制,序号功能太难弄了: ")

param2 = {

'method': 'getXkzsById',

'id': name_id.get(f'{company}')

}

res2 = requests.post('http://scxk.nmpa.gov.cn:81/xk/itownet/portalAction.do',headers=handers,params=param2)

print(res2.json())

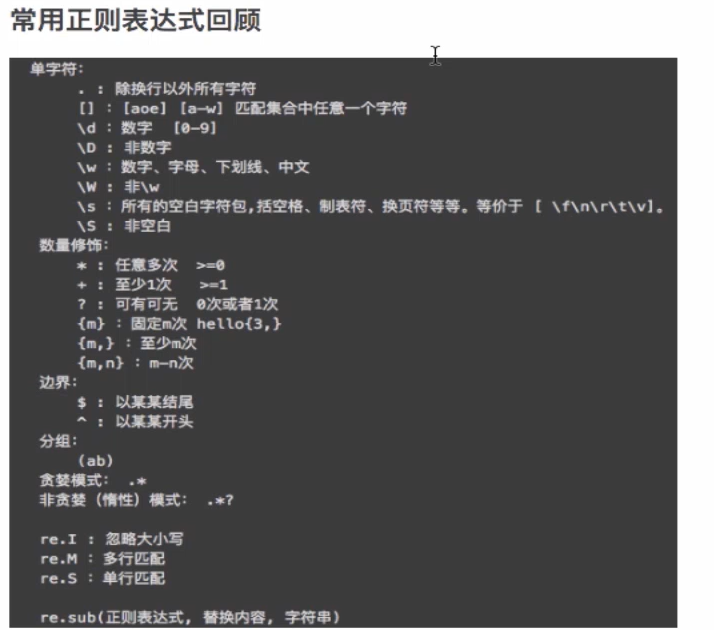

2.数据解析

聚焦爬虫:爬取页面中指定的页面内容

数据解析分类:

·正则

·bs4

·xpath(重点)

数据解析原理概述:

解析的局部的文本内容都会在标签之间或者标签对应的属性中进行存储

进行指定标签的定位

标签或者标签对应属性中存储的数据值进行提取,这个过程就叫解析。

2.1使用正则来进行数据解析

2.2bs4解析(python独有的方式)

bs4数据解析的原理:

1.实例化一个BeautifuSoup的对象,并且将页面源码数据加载到该对象中。

2.通过调用BeautifuSoup对象中相关的属性或者方法进行标签定位和数据提取。

pip install bs4

2.2.1示例:实例化BeautifulSoup

from bs import BeautifulSoup

fp = open('./test.thml','r',encoding='utf-8')

soup = BeautifulSoup(fp,'lxml')

示例二:将互联网上获取的页面源码加载到该对象中

from bs import BeautifulSoup

page_text = page.taxt

soup = BeautifulSoup(page_text,'lxml')

2.2.2相关方法和属性

beautifulSoup常用方法 - Crush999 - 博客园 (cnblogs.com)

soup.a#获取其中的a标签,只获取第一个 soup.tagName,返回第一次出现的那个标签

soup.find('a'):#返回a标签的值

soup.find('div',class_='abc')#返回属性是abc的biv标签

soup.find_all('div')#返回所有的标签是div的标签,就是返回符合标准的所有标签。(列表)

soup.select('.asd')#这里面的.是类选择器,这个select函数里面装选择器,返回复合条件的标签(列表)

soup.select('.asd > url > a')[2]#定位asd类下的url标签中的a标签,返回的是列表,想要哪个标签就用列表的索引。>表示的是一个层级

soup.select('.asd > url a')#空格表示的是多个层级

soup.a.text/string/get_text()#后面三个都是获取a标签中的的文本内容的意思

text/get_text()#可以获取某一个标签中所有的文本内容

2.3xpath解析

是最常用最便携的高效解析方式

解析原理:

实例化 etree对象,且需要将被解析的页面源码加载到该对象中。

调用etree对象中的xpath方法结合着xpath表达式实现标签的定位和内容的捕获

pip install lxml

实例化对象

form lxml import etree

tree = etree.parse(filePath)

or

tree = etree.HTML('page_text')

2.3.1常用xpath表达式

tree.xpath('/html/head/title')#在html标签中找head标签中找title标签。返回一个列表,element类型的对象。如果是两个//那就是多个层级

上面的表达式就是也可以表示成这样

#tree.xpath('/html//title')

#tree.xpath('//title')这个表示源码中所有的title标签,从任意位置开始定位。

r = tree.xpath('//title[@class = "song"]')#实现属性定位

r = tree.xpath('//title[@class = "song"]/p[3]')#实现索引定位,他这个索引是从一开始的,ε=(´ο`*)))唉,能不能统一一下啊。注意那个p是标签名字

tree.xpath('/html//title/text()')#就可以取到文本内容,返回的是一个列表

tree.xpath('/html//title//text()')#就可以取到里面所有的文本内容,返回的是一个列表

tree.xpath('/html//title/@属性名')#取属性

注意在表达式中用|管道符表示或者

import requests

from bs4 import BeautifulSoup#导入模块

url="https://www.unjs.com/lunwen/f/20191111001204_2225087.html"

headers= {

'User-Agent': "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.67 Safari/537.36 Edg/87.0.664.47"

}#模拟的服务器头

html = requests.get(url,headers=headers)

page.encoding = 'utf-8'#防止乱码

(36条消息) scrapy爬取小说时换行问题_Keyu-CSDN博客_python爬小说换行

验证码绕过的时候就用云打码平台

实例,爬取图片,还有个爬小说的我就不放出来了

from lxml import etree

import requests

import os

print("米娜这个东西要开全局代理才能看呢!")

handers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69'}

while True:

page = input("大家好越江又来送好东西了,这次是图片哦,每一页有三十四个系列的图片,最多八十页哦!请输入您要的页数: ")

if int(page) > 80 or int(page) <= 0:

print("不要就不要,乱输什么数字啊!")

else:

break

if not os.path.exists("好东西2"):

print('正在爬取网址')

os.makedirs('好东西2')

pic_dict = dict()

for pagex in range(1, int(page) + 1):

try:

url = f'http://www.52rosi.com/?action=ajax_post&pag={pagex}'

res = requests.get(url,headers=handers)

res.encoding='utf-8'

page_text = res.text

tree = etree.HTML(page_text)

print(f'{pagex / int(page) * 100}%...正在爬取网址')

for index in range(1,33):

pic_nam = tree.xpath(f'//div[@class="post-home"][{index}]//span/a/@title')

pic_name = pic_nam[0].replace("上的评论",' ')

pic_url = tree.xpath(f'//div[@class="post-home"][{index}]//span/a/@href')

pic_dict[pic_name] = pic_url[0]

except:

print(f'第{page}页出错了')

print(res.status_code)

with open('./好东西2/各个网址.txt',mode='w',encoding='utf-8') as fp:

fp.write(str(pic_dict))

tip = 0

for item_name,item_url in pic_dict.items():

tip += 1

print(f"{tip / len(pic_dict.keys()) * 100}%正在帮你下载图片")

os.makedirs(f'./好东西2/{item_name}')

res = requests.get(item_url,headers=handers)

tree = etree.HTML(res.text)

url_list = tree.xpath('//div[@id="wrap"]//dt[@class="gallery-icon"]/a/@href')

for index,urlx in enumerate(url_list):

res = requests.get(urlx, headers=handers)

with open(f"./好东西2/{item_name.strip()}/{index}.jpg",mode='wb') as f:

f.write(res.content)

else:

print("把当前目录下的“好东西”文件夹删了,我才好给你资源啊!")

3.高性能异步爬虫

3.1异步爬虫的方式

·多线程,多进程(不建议)

·线程池,进程池(建议)

下面线程池的使用

import time

# 导入线程池所对应的类

from multiprocessing import Pool

#模拟下载

def get_page(str):

print("正在下载", str)

time.sleep(2)

print("下载成功", str)

if __name__ == '__main__':

listx = ['aa','ss','vv','bb']

#实例化一个线程池对象

pool = Pool(4)#创建了四个线程

#将列表中每一个元素传递个get

pool.map(get_page,listx)

Python中用requests处理cookies的3种方法 - 王昭君的昭 - 博客园 (cnblogs.com)

3.2单线程加异步协程

3.2.1基本概念

(36条消息) python多任务—协程(一)_夜风晚凉的博客-CSDN博客_python 协程

event_loop:事件循环

coroutine:协程对象

task:任务

future:代表将来执行或还没有执行的任务,实际上和没有本质区别

asunc:定义一个协程

await:用来挂起阻塞方法的执行。

3.2.2相关操作

import asyncio

async def request(url):

print(f'正在请求的url是{url}')

print('请求成功')

#async修饰的函数,调用之后返回的一个协程对象

c = request('www.baidu.com')

#创建一个事件循环对象

loop = asyncio.get_event_loop()

#将协程对象注册到loop中,然后启动loop

loop.run_until_complete(c)

'''

正在请求的url是www.baidu.com

请求成功

'''

import asyncio

async def request(url):

print(f'正在请求的url是{url}')

print('请求成功')

#async修饰的函数,调用之后返回的一个协程对象

#task的使用

loop = asyncio.get_event_loop()

#基于loop创建了一个task任务对象

task = loop.create_task(c)

print(task)#还没有被执行

loop.run_until_complete(task)

print(task)#被执行了

#future的使用

loop = asyncio.get_event_loop()

task = asyncio.ensure_future(c)

loop.run_until_complete(task)

print(task)

绑定回调

import asyncio

async def request(url):

print(f'正在请求的url是{url}')

print('请求成功')

return url

#async修饰的函数,调用之后返回的一个协程对象

c = request('www.baidu.com')

def callback_func(task):

print(task.result())#result函数返回执行函数的返回值

#绑定回调

loop = asyncio.get_event_loop()

task = asyncio.ensure_future(c)

#将回调函数绑定到任务对象中

task.add_done_callback(callback_func)

loop.run_until_complete(task)

'''

正在请求的url是www.baidu.com

请求成功

www.baidu.com

'''

#多任务异步协程

import asyncio

import time

#调用这个async修饰的函数就会返回一个协程对象

async def request(url):

print('正在下载',url)

#在异步协程中如果出现了同步模块相关的代码,那么就无法实现异步

#time.sleep(2)

#当在asyncio中遇到阻塞操作必须进行手动挂起

await asyncio.sleep(2)

print('下载完毕',url)

start = time.time()

urls = [

'www.baidu.com',

'www.sogou.com',

'www.goubanjia.com'

]

#任务列表,存放多个任务对象

stasks = []

for url in urls:

c = request(url)

#将协程对象封装到任务对象当中

task = asyncio.ensure_future(c)

stasks.append(task)

loop = asyncio.get_event_loop()

loop.run_until_complete(asyncio.wait(stasks))#必须要把这个列表封装到wait方法中,固定写法

print(time.time()-start)

3.2.3aiohttp模块

注意request模块是同步的,在异步协程中就不能使用request模块了,我们要使用基于异步的网络请求模块。

import aiohttp

#使用该模块中的ClientSession对象

async with aiohttp.ClientSession() as session:

#get()/post()

#headers,params/data,proxy = 'http://ip:port'

async with await session.get(url) as response:#阻塞操作必须使用await关键字手动挂起

page_text = await response.text()#text方法可以返回字符串形式的响应数据,这里获取响应数据操作之前也必须使用await进行手动挂起

#read()方法返回的是二进制形式的响应数据

#json()方法返回的就是json对象

print(page_text)

4.selenium模块

4.1主要作用

selenium模块可以很便捷的获取网站中动态加载的数据。

selenium也可以十分便捷的实现模拟登陆

什么是selenium?

基于浏览器自动化的一个模块。

4.2selenium初试

使用这个模块,还需要一个浏览器的驱动程序

Selenium3 + Python3:安装selenium浏览器驱动 - 知乎 (zhihu.com)

4.3常用方法

selenium模块 - linhaifeng - 博客园 (cnblogs.com)

我不怎么用就偷下懒啦(^▽^)

5.scrapy框架

什么是框架?

就是一个集成了很多功能并且具有很强通用性的一个项目模板。

如何学习框架?

专门学习框架封装的各种功能的详细用法。

5.1环境安装

windows:

pip install wheel

下载twisted,下载地址为http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

安装twisted,pip install Twisted-17.1.0-cp36-cp36m-win_amd64.whl

pip install pywin32

pip install scrapy

测试:在终端输入scrapy指令,没有报错即表示安装成功!

5.2基本使用

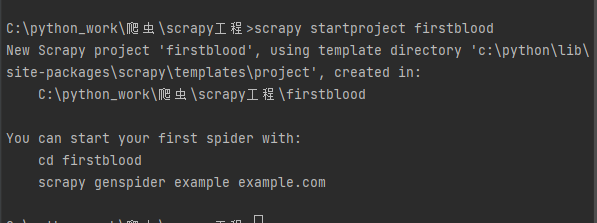

创建一个工程:用terminal

scrapy startproject xxx

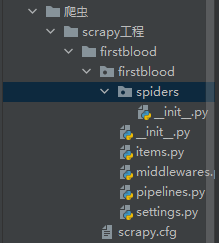

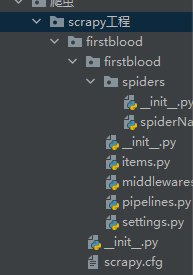

下面的scrapy.cfg文件就是他的配置文件,里面的spider文件夹我们一般称为爬虫文件夹。

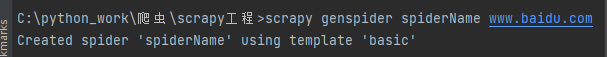

在spider字母里中创建一个爬虫文件

·scrapy genspider spiderName www.xxx.com

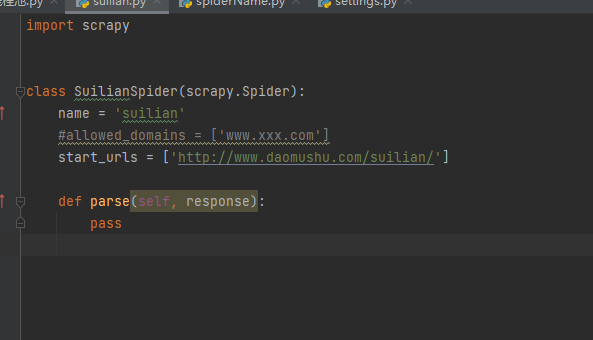

分析一下创建的爬虫文件

import scrapy

class SpidernameSpider(scrapy.Spider):#他的父类是spider

#爬虫文件的名称:就是爬虫源文件的一个唯一标识,不能重复

name = 'spiderName'

#允许的域名:用来限定start urls列表中那些url可以进行请求发送,但是我们通常情况下我们不会使用这个限制。

allowed_domains = ['www.baidu.com']

#起始的url列表:该列表中存放的url会被scrapy自动进行请求的发送

start_urls = ['http://www.baidu.com/']

#用作于数据解析,response参数表示的就是请求成功后对应的响应对象,parse调用一次只会接受一个调用response

def parse(self, response):

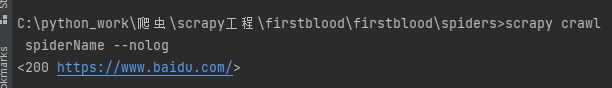

print(response)#这个是后面我自己加的,实验用的

执行工程

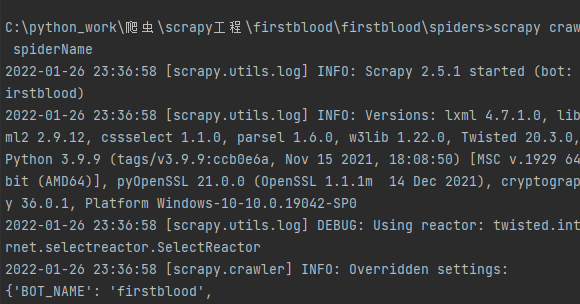

scrapy crawl spiderName

执行上述爬虫文件之后会出现一堆日志,但是没有输出response。

重要输出

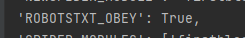

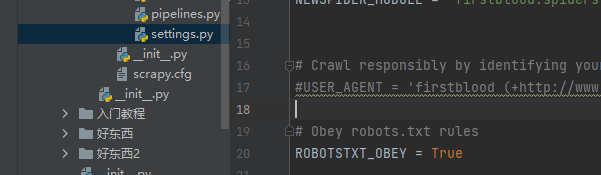

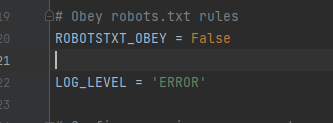

也就是我们尊从了robots协议的,也就是其他的东西我们就爬不到了,先给他改成false。

但是那么多日志信息打扰了我们的查询,我们用–nolog参数可以消除参数

但是如果一味的使用nolog的话,我们反而有些时候看不到报错的信息,那该怎么办呢

在setting中加一行 LOG_LEVEL = ‘ERROR’

让我们输出指定类型的日志信息,这样我们就只看得到报错的日志了。

创建一个工程:用terminal

scrapy startproject xxx

在spider字母里中创建一个爬虫文件

·scrapy genspider spiderName www.xxx.com

执行工程

scrapy crawl spiderName

LOG_LEVEL = 'ERROR'

setting里面也有ua伪装

想要拿到selector里面的data值,就用.extract()对象

如果列表调用了extract,则表示将每一个selector对象中data对应的字符串提取了出来。

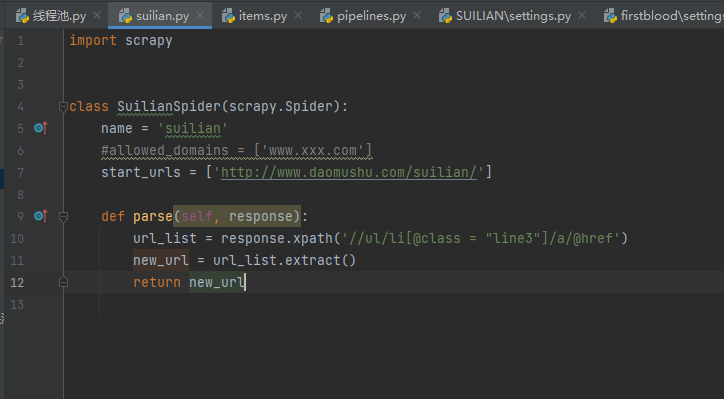

5.3数据解析

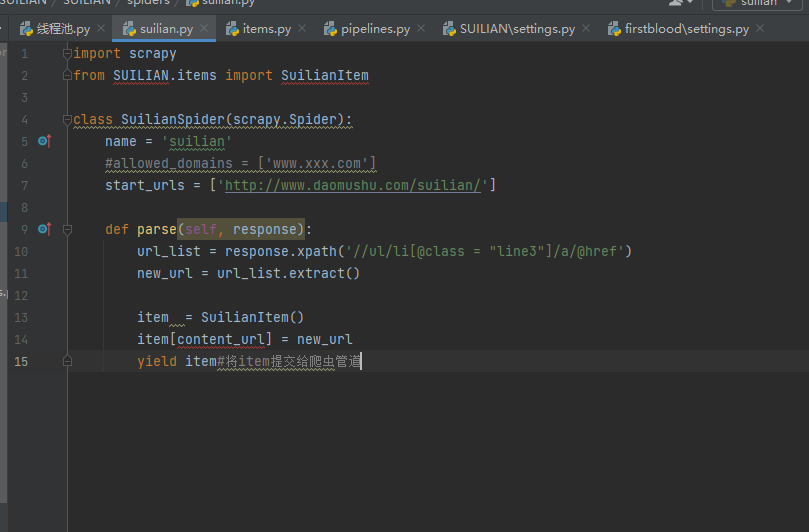

下面用一个实例来介绍一下数据解析,爬取一下《碎脸》

碎脸小说(鬼古女)_碎脸全文免费阅读_盗墓小说网 (daomushu.com)

首先创建创建一个工程,

然后我们就可以用parse方法进行数据解析就可以了

在这个框架中,我们直接在response.xpth()进行数据解析。返回的是一个列表,里面的元素是selector对象,想要拿到里面的data值,就用.extract()对象

那就简单了啊!开始解析!

import scrapy

class SuilianSpider(scrapy.Spider):

name = 'suilian'

#allowed_domains = ['www.xxx.com']

start_urls = ['http://www.daomushu.com/suilian/']

def parse(self, response):

url_list = response.xpath('//ul/li[@class = "line3"]/a/@href')

new_url = url_list.extract()

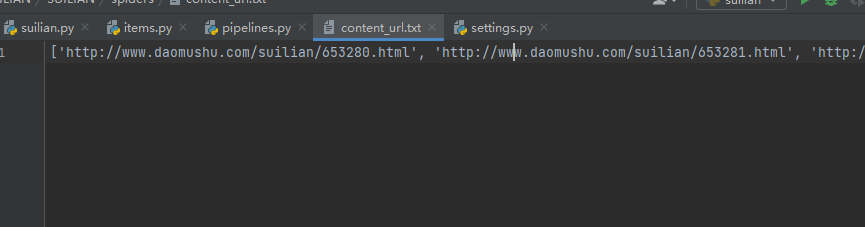

这样就把所有的url解析出来了,但是我还没有学到用scrapy再次利用url,不可能在这里面再次用一下request模块,就先把这个url给爬下来就可。

5.4数据持久化存储

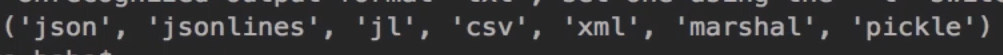

5.4.1基于终端指令

要求只可以将parse方法的返回值存储到本地的文本文件中。

scrapy crawl xxx -o ./xxx.csv#在执行的时候-o就可以把parse的返回值存储到当前文件夹下面的xxx.csv中。只支持这些文件类型

5.4.2基于管道(重点)

编码流程:

1.数据解析

2.在item类中定义相关的属性

3.将解析的数据封装到item类型的对象中

4.将item类型的对象提交给管道进行持久化存储的操作

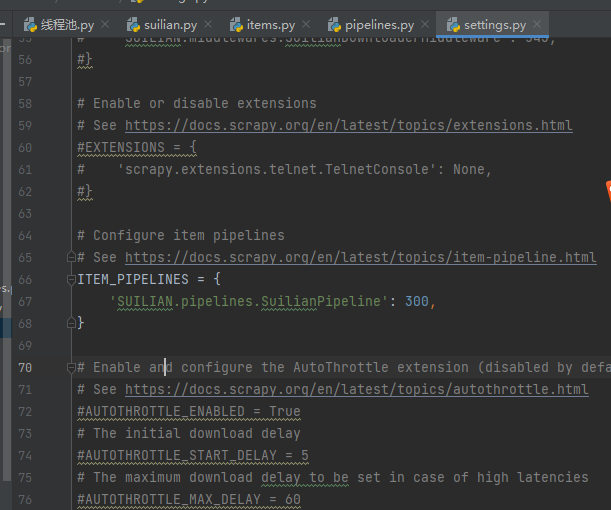

5.在配置文件中开启管道

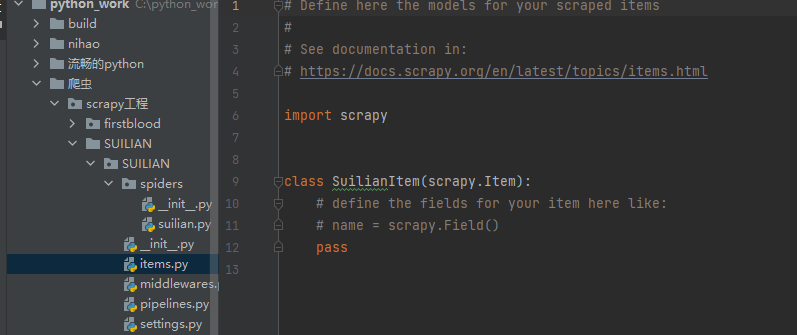

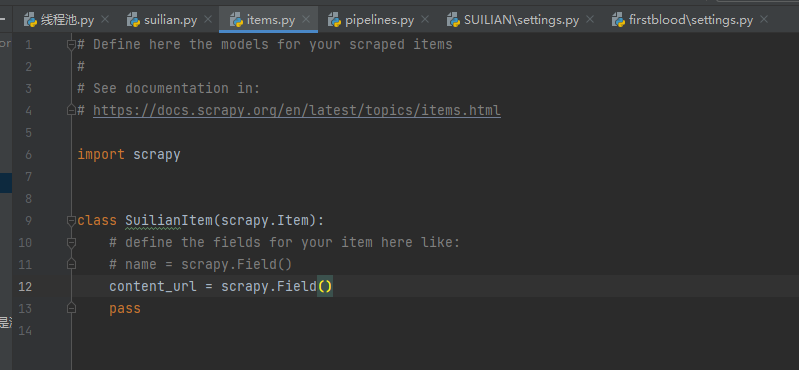

在创建的工程中,有一个items文件,在里面给我们定义了一个类

但是并没有定义属性,我们就可以用name = scrapy.Field()来定义我们的属性。

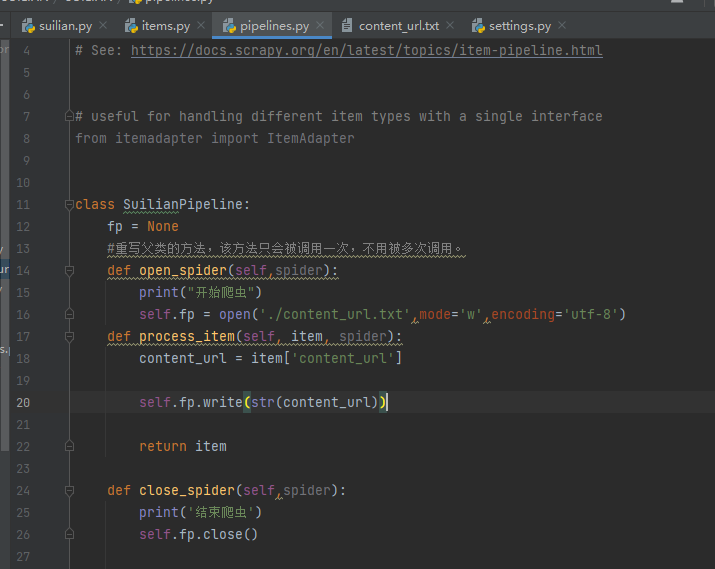

同样也有一个pipelines文件,里面有个类,就是专门用来处理(持久化存储)item对象的。

里面的方法可以接受爬虫文件提交过来的item对象

下面我演示一下处理刚刚得到的碎脸小说url。

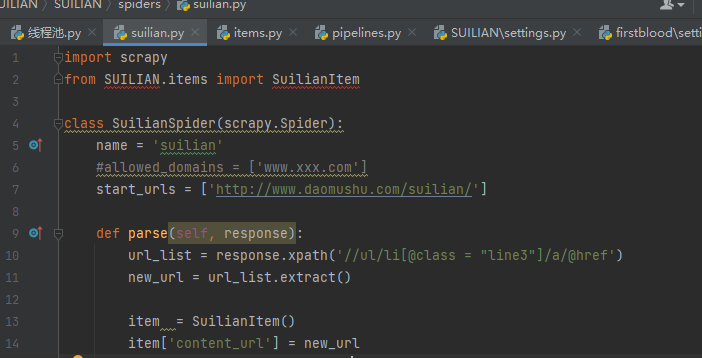

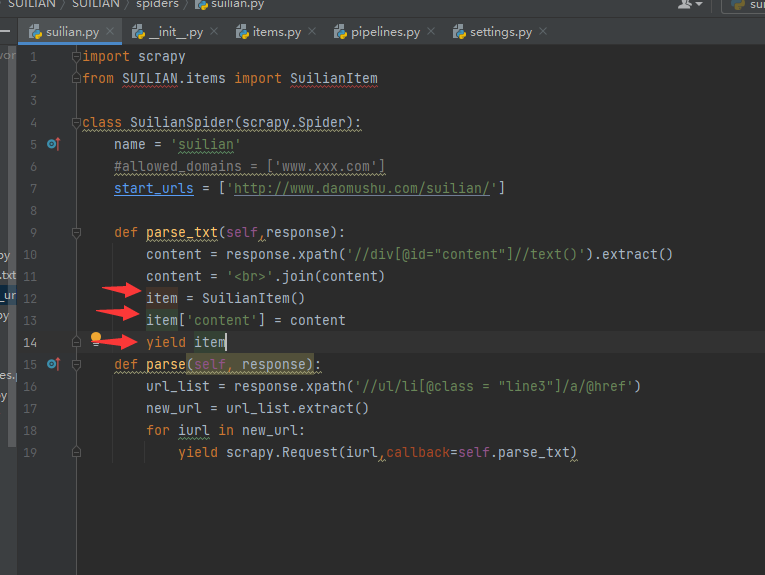

5.4.2.1实例

首先解析到数据

然后在item类中定义相关属性

将解析的数据封装到item类型的对象中(记得导入item类)

将item类型的对象提交给管道,其中processitem方法,只要接收到一个item就会被调用一次

在管道文件中进行持久化操作

在设置中开启管道的注释

三百表示优先级,数值越小优先级越高

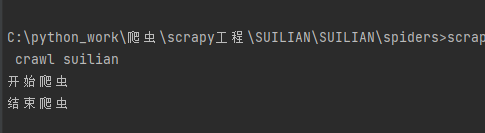

最后运行,发现数据以及存储成功

5.2.3面试题,要把一份数据存到本地一份存到数据库

那我们可以在管道类中多定义一份类,把他存放到数据库中。

setting中谁的优先级最高谁先接受item,为了保证后面执行的管道类也能够拿到item,在process_Item中也得return一个item。

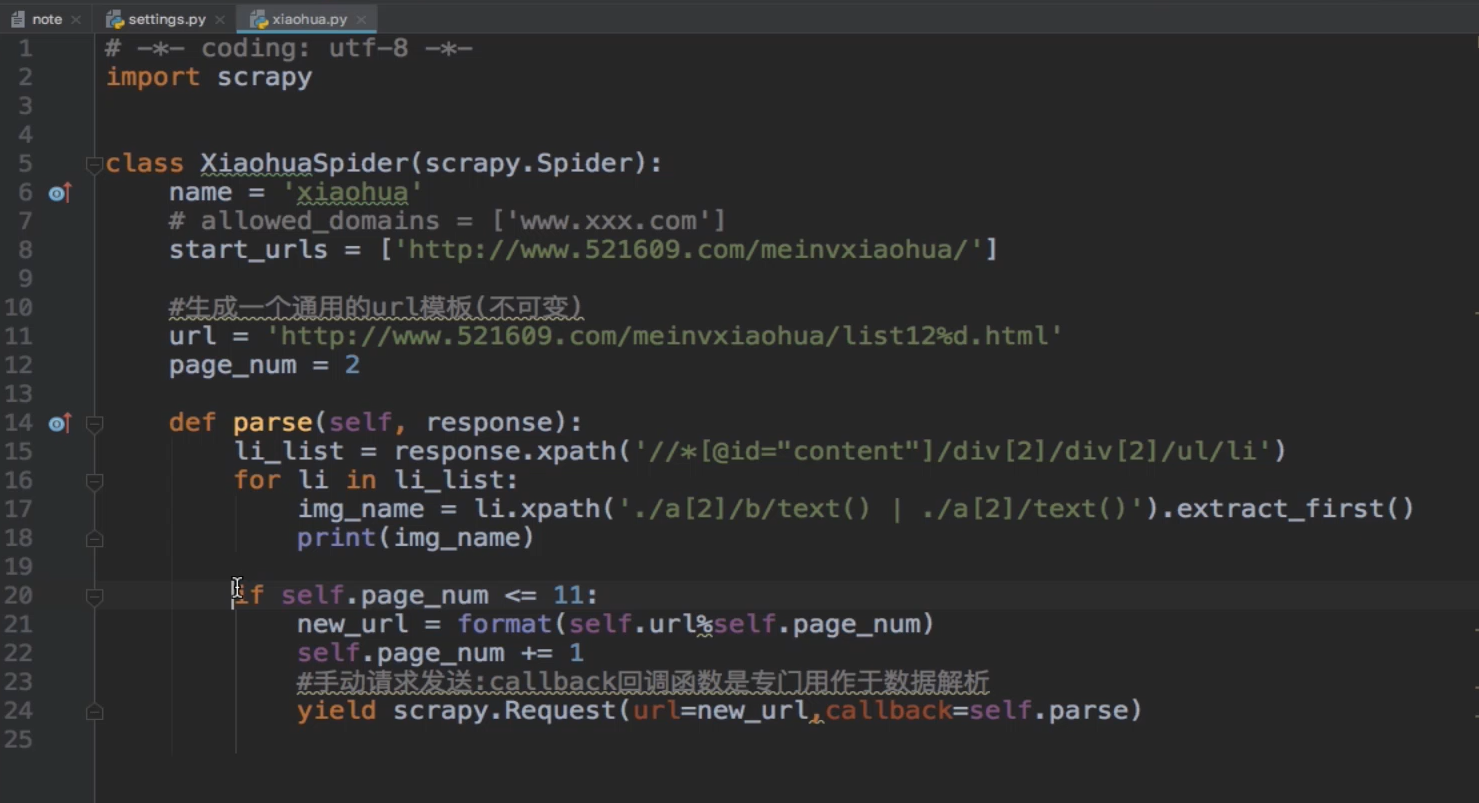

5.3全站数据爬取

就是将网站中某板块下的全部页码对应的页面数据进行爬取。

这样会将所有页面中的xpath表达式表示的数据进行爬爬取。

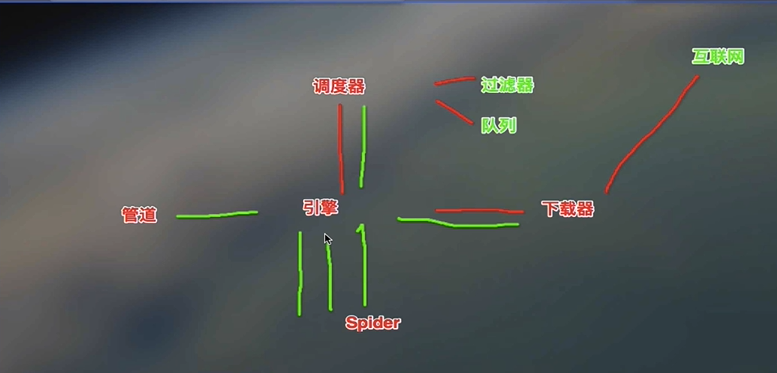

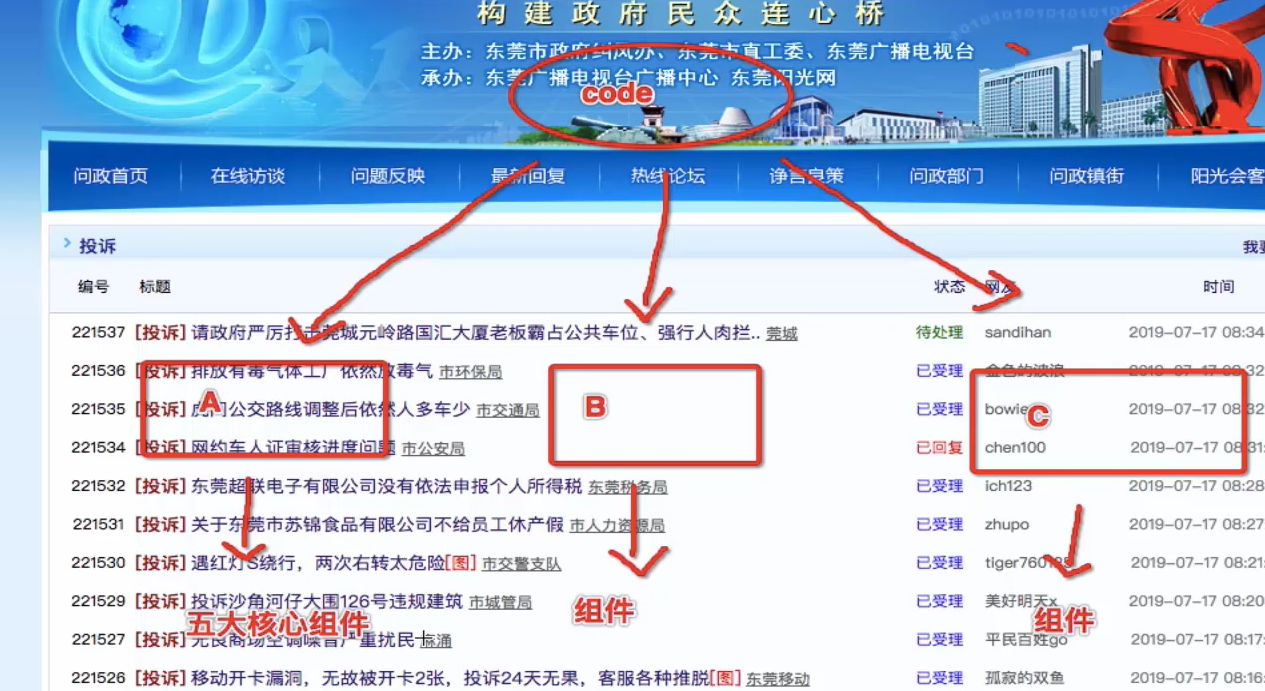

5.4五大核心组件

5.5请求传参(深度爬取)

使用场景:如果爬取解析的数据不再同一张页面中

要使用深度爬取就要用

yield scrapy.Reuests(xxx,callback=self.zidingyifangfa)

为什么不直接用xpath解析呢?因为在首页我们就已经使用了xpath了,为了防止起冲突,我们要额外定义一个方法来实现详情页的数据解析。

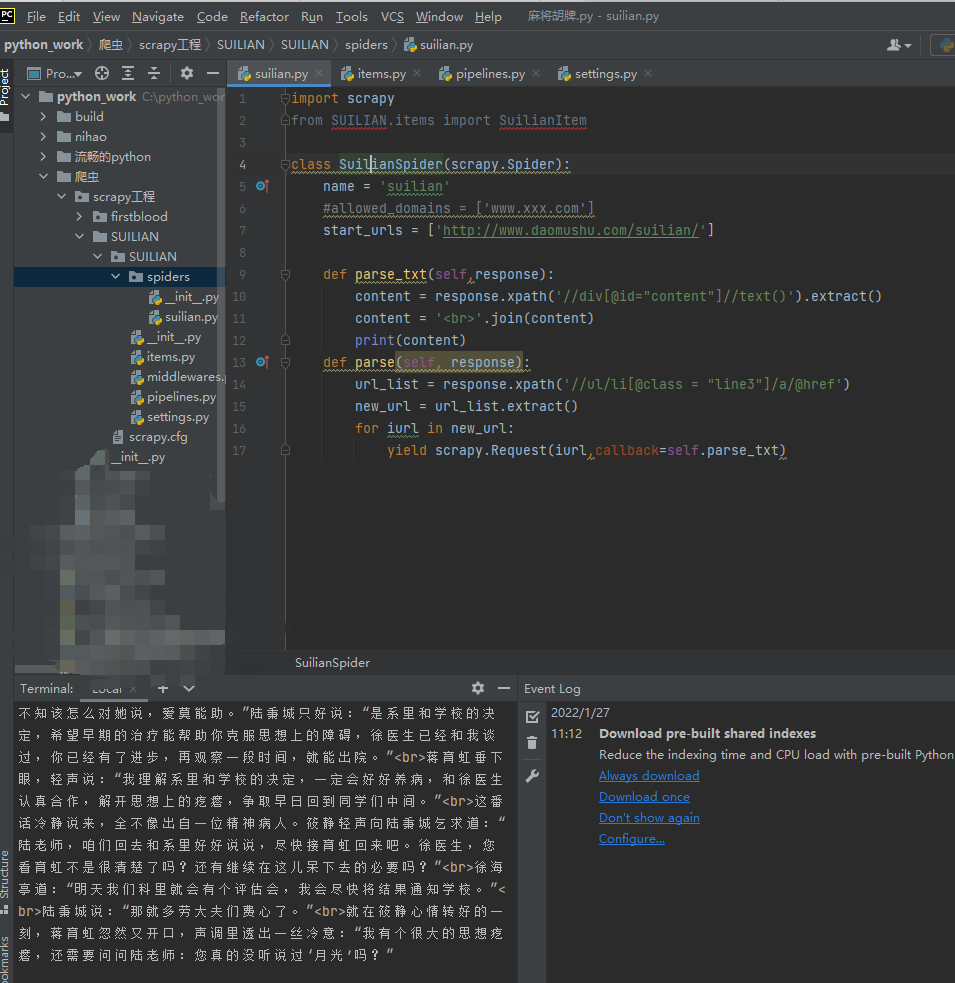

那么现在我们就来完善我们的碎脸爬虫

首先我们迭代列表,然后自己写了个方法获取到了小说文本,现在只需要把他们弄到管道存起来就好了。

import scrapy

from SUILIAN.items import SuilianItem

class SuilianSpider(scrapy.Spider):

name = 'suilian'

#allowed_domains = ['www.xxx.com']

start_urls = ['http://www.daomushu.com/suilian/']

def parse_txt(self,response):

content = response.xpath('//div[@id="content"]//text()').extract()

content = '<br>'.join(content)

print(content)

def parse(self, response):

url_list = response.xpath('//ul/li[@class = "line3"]/a/@href')

new_url = url_list.extract()

for iurl in new_url:

yield scrapy.Request(iurl,callback=self.parse_txt)

item文件中定义一个新的属性

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class SuilianItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

content_url = scrapy.Field()

content = scrapy.Field()#新定义的属性

pass

在爬虫文件中把内容传入到item类中去,并且给属性赋值,然后将数据提交给管道。

随后我们就爬取到了碎脸小说

下面放一下各个文件的源码

suilian.py

import scrapy

from SUILIAN.items import SuilianItem

class SuilianSpider(scrapy.Spider):

name = 'suilian'

#allowed_domains = ['www.xxx.com']

start_urls = ['http://www.daomushu.com/suilian/']

def parse_txt(self,response):

content = response.xpath('//div[@id="content"]//text()').extract()

content = '<br>'.join(content)

item = SuilianItem()

item['content'] = content

yield item

def parse(self, response):

url_list = response.xpath('//ul/li[@class = "line3"]/a/@href')

new_url = url_list.extract()

for iurl in new_url:

yield scrapy.Request(iurl,callback=self.parse_txt)

setting

# Scrapy settings for SUILIAN project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'SUILIAN'

SPIDER_MODULES = ['SUILIAN.spiders']

NEWSPIDER_MODULE = 'SUILIAN.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.69'

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'SUILIAN.middlewares.SuilianSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'SUILIAN.middlewares.SuilianDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'SUILIAN.pipelines.SuilianPipeline': 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

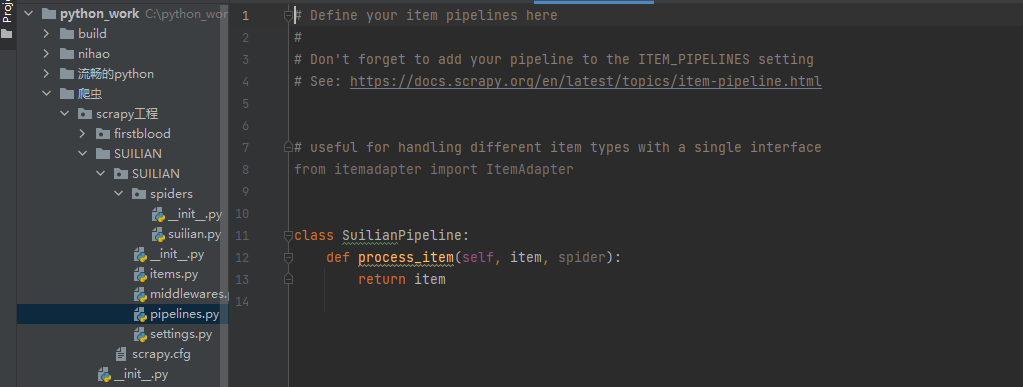

pipelines

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

class SuilianPipeline:

fp = None

#重写父类的方法,该方法只会被调用一次,不用被多次调用。

def open_spider(self,spider):

print("开始爬虫")

self.fp = open('./content.txt',mode='w',encoding='utf-8')

def process_item(self, item, spider):

content = item['content']

self.fp.write(str(content))

return item

def close_spider(self,spider):

print('结束爬虫')

self.fp.close()

items

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

class SuilianItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

content_url = scrapy.Field()

content = scrapy.Field()

pass

5.6图片爬取

在我们的管道类中给我们封装了一个ImagesPipeline

字符串:只需要基于xpath进行解析提交管道进行持久化存储。

图片:xpath解析出图片src的属性值,单独的对图片地址发起请求获取图片二进制类型的数据。

而我们的ImagesPipeline

只需要那个img的src的属性值进行解析,提交到管道,管道就会对图片的src进行请求发送获取图片的二进制类型的数据,且还会帮我们进行持久化存储。

使用流程:

python网络爬虫之使用scrapy爬取图片 - 一张红枫叶 - 博客园 (cnblogs.com)

嘿嘿我还是更喜欢使用requests模块,这个scrapy感觉更麻烦了,可能也是我没有掌握到这个框架的精髓,以后再来慢慢学习。

6.分布式爬虫&增量式爬虫

6.1分布式爬虫

概念:我们需要搭建一个分布式的机群,让其对一组资源进行分布式联合爬取。

作用:提升爬取数据的效率。

如何实现分部试爬虫?

下载个scrapy-redis的组件

这个就是基本的一个结构

为什么原生的scrapy不可以实现分部式爬虫?

调度器不可以被分布式机群共享

管道不可以被分布式机群共享

使用scrapy实现分布式爬虫 - 一只小小的寄居蟹 - 博客园 (cnblogs.com)

6.2增量式爬虫

增量式爬虫 - 笑得好美 - 博客园 (cnblogs.com)

转载请注明来源,欢迎对文章中的引用来源进行考证,欢迎指出任何有错误或不够清晰的表达。后续可能会有评论区,不过也可以在github联系我。